Researchers from Korea develop a new image retrieval system using deep learning algorithms

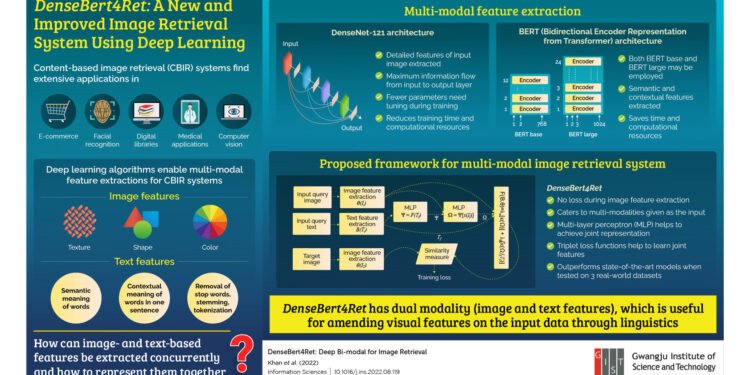

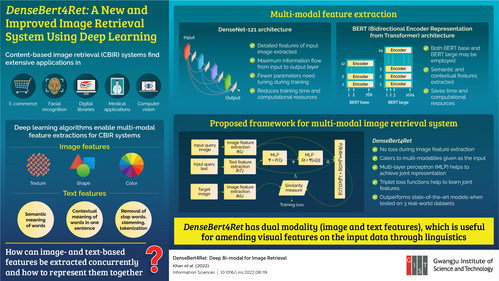

GWANGJU, South Korea, Nov. 9, 2022 /PRNewswire/ -- With the amount of information on the internet increasing by the minute, retrieving data from it is like looking for a needle in a haystack. Content-based image retrieval (CBIR) systems are capable of retrieving desired images from an extensive database based on the user’s input. These systems are used in e-commerce, face recognition, medical applications, and computer vision. CBIR systems work in two ways: text-based and image-based. One way to boost CBIR is to use deep learning (DL) algorithms. DL algorithms enable multi-modal feature extraction, meaning that both image and text features can be used to retrieve the desired image. Even though scientists have tried to develop multi-modal feature extraction, it remains an open problem.

To this end, researchers from Gwangju Institute of Science and Technology have developed DenseBert4Ret, an image retrieval system using DL algorithms. The study, led by Prof. Moongu Jeon and Ph.D. student Zafran Khan, was made available online on September 14, 2022 and published in Volume 612 of Information Sciences. “In our day-to-day lives, we often scour the internet to look for things such as clothes, research papers, news articles, etc. When these queries come into our mind, they can be in the form of both images and textual descriptions. Moreover, at times we may wish to amend our visual perceptions through textual descriptions. Thus, retrieval systems should also accept queries as both texts and images,” says Prof. Jeon, explaining the team’s motivation behind the study.

The proposed model takes both an image and text as the input query. To extract image features from the input, the team used a deep neural network model known as DenseNet-121. This architecture allowed for the maximum flow of information from the input to the output layer and needed tuning of very few parameters during training. DenseNet-121 was combined with the bidirectional encoder representations from transformers (BERT) architecture, which extracts semantic and contextual features from the text input. The combination of these two architectures reduced training time and computational requirements and formed the proposed model, DenseBert4Ret.
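To make the idea of combining the two feature streams concrete, here is a minimal sketch in plain Python. It assumes the image and text feature vectors have already been extracted (by DenseNet-121 and BERT in the real system), joins them by simple concatenation, and passes the result through a small multi-layer perceptron. The concatenation step, the layer sizes, the random weights, and the helper names `fuse` and `init_layer` are all illustrative assumptions, not details taken from the paper.

```python
import math
import random


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def linear(vec, weights, bias):
    """Apply one fully connected layer: weights @ vec + bias."""
    return [sum(w * x for w, x in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


def relu(vec):
    return [max(0.0, x) for x in vec]


def init_layer(n_in, n_out, rng):
    """Hypothetical helper: random weights and zero bias for one layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def fuse(image_features, text_features, layers):
    """Concatenate both modalities (an assumption) and run them through an MLP."""
    joint = list(image_features) + list(text_features)
    for i, (weights, bias) in enumerate(layers):
        joint = linear(joint, weights, bias)
        if i < len(layers) - 1:  # no activation after the final layer
            joint = relu(joint)
    return l2_normalize(joint)


# Toy stand-ins for the real features; actual DenseNet-121 and BERT
# outputs would be far larger vectors.
rng = random.Random(0)
image_features = [rng.gauss(0, 1) for _ in range(8)]
text_features = [rng.gauss(0, 1) for _ in range(6)]
layers = [init_layer(14, 10, rng), init_layer(10, 4, rng)]

joint_embedding = fuse(image_features, text_features, layers)
print(len(joint_embedding))
```

In a trained system the MLP weights would be learned rather than random, so that the joint embedding of an image plus a text modification lands close to the embedding of the desired target image.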

The team then used three real-world datasets, Fashion200k, MIT-States, and FashionIQ, to train the proposed system and compare its performance against state-of-the-art systems. They found that DenseBert4Ret showed no loss during image feature extraction and outperformed the state-of-the-art models. The proposed model successfully handled the multi-modal input, with the multi-layer perceptron and triplet loss function helping it learn the joint features.
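A triplet loss of the kind mentioned above can be sketched as follows. This is the standard textbook form, which pulls a query embedding toward a matching target and pushes it away from a non-matching one by at least a margin. The Euclidean distance, the margin of 1.0, and the tiny inventory with its item names are assumptions for illustration; the release does not state which variant the paper uses.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: max(0, d(a, p) - d(a, n) + margin)."""
    return max(0.0, euclidean(anchor, positive)
               - euclidean(anchor, negative) + margin)


def rank_by_distance(query, database):
    """Return database keys ordered from nearest to farthest embedding."""
    return sorted(database, key=lambda name: euclidean(query, database[name]))


# The positive is already much closer than the negative, so the loss is zero.
print(triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0]))

# Hypothetical inventory of pre-computed embeddings for retrieval.
database = {
    "red_dress": [0.9, 0.1],
    "blue_dress": [0.1, 0.9],
    "red_shirt": [0.7, 0.4],
}
query = [1.0, 0.0]
print(rank_by_distance(query, database))
```

At retrieval time only the ranking step is needed: the joint query embedding is compared against stored embeddings of the inventory images, and the nearest ones are returned.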

“Our model can be used anywhere where there is an online inventory and images need to be retrieved. Additionally, the user can make changes to the query image and retrieve the amended image from the inventory,” concludes Prof. Jeon.

Here's hoping to see the DenseBert4Ret system applied in our everyday search engines soon!

Reference

Title of original paper: DenseBert4Ret: Deep bi-modal for image retrieval

Journal: Information Sciences

DOI: https://doi.org/10.1016/j.ins.2022.08.119

Affiliations:

- School of Electrical Engineering and Computer Science, Gwangju Institute of Science and Technology (GIST)

- National University of Science and Technology (NUST), Pakistan

- Department of Computer Engineering, Dankook University

*Corresponding author’s email: [email protected]

About the Gwangju Institute of Science and Technology (GIST)

Website: http://www.gist.ac.kr/

Contact:

Chang Sung Kang

82 62 715 6253

[email protected]

SOURCE Gwangju Institute of Science and Technology (GIST)
